Severity

High

Analysis Summary

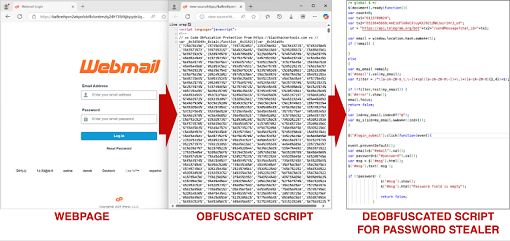

Cybersecurity researchers have identified a new trend in which large language models (LLMs) are used to generate new variants of malicious JavaScript code that can evade detection. While LLMs themselves may not create malware from scratch, they are effective at obfuscating and transforming existing malware to bypass security mechanisms.

These transformations, which include techniques such as variable renaming and code reimplementation, produce malware variants that retain their malicious functionality but are more difficult to identify, even for advanced machine learning models and platforms like VirusTotal. Applied at scale, this method can significantly degrade the performance of malware classifiers and increase the volume of undetected malicious code.
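
To illustrate why variable renaming alone can defeat exact-match signatures, the following minimal sketch compares two benign, functionally identical JavaScript snippets. Everything in it is an assumption made for illustration (the sample snippets, the tiny keyword set, and the helper names `exact_signature` and `structural_fingerprint`); it is not the researchers' tooling or any real detection engine.

```python
import hashlib
import re

# Two benign, functionally identical JavaScript snippets (illustrative only).
# The second differs from the first only in its identifier names.
ORIGINAL = "function add(a, b) { var total = a + b; return total; }"
RENAMED = "function qz(x1, y2) { var k9 = x1 + y2; return k9; }"

# Deliberately tiny keyword set; a real tokenizer would cover the full language.
JS_KEYWORDS = {"function", "var", "return"}
TOKEN_RE = re.compile(r"[A-Za-z_$][A-Za-z0-9_$]*|\d+|\S")


def exact_signature(code: str) -> str:
    """Hash of the raw text, as a naive signature-based check would use."""
    return hashlib.sha256(code.encode("utf-8")).hexdigest()


def structural_fingerprint(code: str) -> str:
    """Hash of the token stream with identifiers replaced by placeholders.

    Each distinct non-keyword identifier is mapped to ID0, ID1, ... in order
    of first appearance, so consistent renaming leaves the result unchanged.
    """
    mapping = {}
    normalized = []
    for token in TOKEN_RE.findall(code):
        if re.match(r"[A-Za-z_$]", token) and token not in JS_KEYWORDS:
            token = mapping.setdefault(token, f"ID{len(mapping)}")
        normalized.append(token)
    return hashlib.sha256(" ".join(normalized).encode("utf-8")).hexdigest()


if __name__ == "__main__":
    print("exact signatures match:", exact_signature(ORIGINAL) == exact_signature(RENAMED))
    print("structural fingerprints match:",
          structural_fingerprint(ORIGINAL) == structural_fingerprint(RENAMED))
```

The raw hashes differ while the normalized fingerprints agree. This normalization only neutralizes renaming; it would not survive the code reimplementation also described above, which is one reason behavior-based detection matters.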

A significant concern is that these obfuscated JavaScript variants can trick automated malware detection systems. According to the researchers, LLM-generated variants were able to lower the malicious score of malicious JavaScript by up to 88%. Because LLM-based transformations look more natural than the output of traditional obfuscation libraries, they are harder to detect. Despite the security guardrails put in place by LLM providers, tools like WormGPT have emerged to automate phishing email creation and malware generation, highlighting the growing role of generative AI in cybercrime.

Meanwhile, cybersecurity threats also extend to hardware: academics from North Carolina State University have revealed a side-channel attack called TPUXtract that targets Google Edge Tensor Processing Units (TPUs). The attack, capable of stealing model hyperparameters with near-perfect accuracy, could lead to intellectual property theft or follow-on cyberattacks. It works by capturing electromagnetic signals during neural network inference and using that data to recreate a model's architecture and functionality. Although it requires physical access and expensive equipment, it represents a significant risk for AI systems deployed on edge hardware.

Additionally, AI frameworks like the Exploit Prediction Scoring System (EPSS) are vulnerable to adversarial attacks, as demonstrated by Morphisec. Attackers can manipulate external signals, such as social media activity and public code availability, to artificially inflate the perceived threat of certain vulnerabilities. By boosting the activity metrics of a security flaw, attackers can mislead organizations that rely on EPSS scores for vulnerability management. This manipulation can lead to misallocated resources, with vulnerabilities incorrectly prioritized based on skewed exploitation predictions.

Impact

- Security Bypass

- Code Execution

- Gain Access

Indicators of Compromise

Domain Name

- bafkreihpvn2wkpofobf4ctonbmzty24fr73fzf4jbyiydn3qvke55kywdi.ipfs.dweb.link

- dweb.link

- dub.sh

- jakang.freewebhostmost.com
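
As a starting point for hunting these indicators in proxy or DNS logs, the sketch below checks whether a URL or hostname equals one of the listed domains or is a subdomain of one. The function name `matches_ioc` and the assumption that log entries are plain URLs or hostnames are illustrative; adapt the parsing to whatever your security controls export.

```python
from urllib.parse import urlsplit

# Domains copied from the indicator list above.
IOC_DOMAINS = {
    "bafkreihpvn2wkpofobf4ctonbmzty24fr73fzf4jbyiydn3qvke55kywdi.ipfs.dweb.link",
    "dweb.link",
    "dub.sh",
    "jakang.freewebhostmost.com",
}


def matches_ioc(url_or_host: str) -> bool:
    """Return True if the host is an IOC domain or a subdomain of one."""
    candidate = url_or_host.strip().lower()
    # urlsplit only fills in the hostname when a "//" authority marker is present.
    if "//" not in candidate:
        candidate = "//" + candidate
    host = (urlsplit(candidate).hostname or "").rstrip(".")
    labels = host.split(".")
    # Test every suffix made of whole labels: a.b.c, then b.c, then c.
    return any(".".join(labels[i:]) in IOC_DOMAINS for i in range(len(labels)))


if __name__ == "__main__":
    samples = [
        "https://dub.sh/abc",
        "example.ipfs.dweb.link",
        "jakang.freewebhostmost.com:443",
        "https://example.com/dub.sh",
        "notdub.sh",
    ]
    for entry in samples:
        print(entry, "->", matches_ioc(entry))
```

Matching on whole labels avoids false hits such as `notdub.sh`. Note that `dweb.link` and `dub.sh` appear to be shared services (an IPFS gateway and a link shortener), so hits on those parent domains may deserve triage before action.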

Remediation

- Block all threat indicators at your respective controls.

- Search for indicators of compromise (IOCs) in your environment utilizing your respective security controls.

- Update and improve existing detection systems to focus on behavioral analysis rather than relying solely on signature-based methods.

- Continuously retrain machine learning models on new, obfuscated malware variants to improve their ability to detect advanced threats.

- Employ a multi-layered approach to security, integrating heuristic analysis, anomaly detection, and threat intelligence to spot obfuscated malware.

- Ensure physical access to AI hardware is tightly controlled to prevent attackers from gaining proximity to edge TPUs.

- Implement electromagnetic shielding on devices to minimize the risk of side-channel attacks and prevent the leakage of sensitive data.

- Employ hardware security mechanisms such as trusted execution environments (TEEs) to secure AI model data and prevent model extraction.

- Be cautious about external signals such as social media posts or code repositories that can be manipulated to influence threat assessments.

- Use a combination of threat intelligence feeds, vulnerability scanners, and manual verification to validate the information provided by EPSS or similar systems.

- Enhance the robustness of the AI models behind EPSS-style scoring by filtering out unreliable data sources, so that artificially inflated signals cannot skew the resulting scores.
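
The recommendation to validate EPSS output against other sources can be sketched as a simple triage rule. The code below is a hypothetical illustration, not part of EPSS or any vendor product: the `VulnSignal` fields, the `triage` function, the 0.5 threshold, and the placeholder CVE identifiers are all assumptions. The idea is that a high score with no independent corroboration is routed to manual review instead of being trusted outright.

```python
from dataclasses import dataclass


@dataclass
class VulnSignal:
    cve_id: str
    epss_score: float          # exploitation probability in [0, 1]
    in_trusted_feed: bool      # listed in a curated threat intelligence feed
    scanner_confirmed: bool    # an internal scanner found an affected asset


def triage(v: VulnSignal, epss_threshold: float = 0.5) -> str:
    """Combine the EPSS score with independent evidence (hypothetical policy)."""
    corroborated = v.in_trusted_feed or v.scanner_confirmed
    if v.in_trusted_feed and v.scanner_confirmed:
        return "patch-now"        # strong independent evidence, regardless of score
    if v.epss_score >= epss_threshold:
        # A high score alone may reflect manipulated external signals.
        return "prioritize" if corroborated else "manual-review"
    return "investigate" if corroborated else "routine"


if __name__ == "__main__":
    # Placeholder identifiers, not real CVEs.
    examples = [
        VulnSignal("CVE-0000-0001", 0.92, False, False),
        VulnSignal("CVE-0000-0002", 0.92, True, False),
        VulnSignal("CVE-0000-0003", 0.03, True, True),
        VulnSignal("CVE-0000-0004", 0.03, False, False),
    ]
    for v in examples:
        print(v.cve_id, "->", triage(v))
```

The threshold and the category names would need tuning to an organization's own risk appetite; the point of the sketch is only that the score is never the sole input.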